Using a Maximum Entropy Classifier to link “good” corpus examples to dictionary senses

Authors: Alexander Geyken, Christian Pölitz, Thomas Bartz

Abstract:

A recurring problem in dictionary maintenance is replacing outdated example sentences with up-to-date corpus examples. Extraction methods such as the good example finder (GDEX; Kilgarriff, 2008) have been developed to tackle this problem. We extend GDEX to polysemous entries by applying machine learning techniques to map example sentences to the appropriate dictionary senses. The idea is to enrich our knowledge base by computing the set of all collocations and to use a maximum entropy classifier (MEC; Nigam, 1999) to learn the mapping between a corpus sentence and its correct dictionary sense. Our method is based on hand-labeled sense annotations. Results show an accuracy of 49.16% for the MEC, which is significantly better than the Lesk algorithm (31.17%).
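
To make the classification step concrete, the following is a minimal sketch of a maximum entropy (multinomial logistic regression) sense classifier trained on hand-labeled sentences. It is not the authors' implementation: the bag-of-words features stand in for the collocation features mentioned in the abstract, and the toy sentences, sense labels, function names, and hyperparameters are all assumptions made for illustration.

```python
import math
from collections import defaultdict

def features(sentence):
    # Assumption: plain bag-of-words features. The abstract mentions
    # collocations, which are not modeled in this sketch.
    return set(sentence.lower().split())

def predict_proba(weights, senses, feats):
    # Softmax over per-sense sums of feature weights.
    scores = {s: sum(weights[(s, f)] for f in feats) for s in senses}
    top = max(scores.values())
    exps = {s: math.exp(v - top) for s, v in scores.items()}
    total = sum(exps.values())
    return {s: e / total for s, e in exps.items()}

def train(examples, epochs=200, lr=0.5):
    # Stochastic gradient ascent on the conditional log-likelihood.
    senses = sorted({sense for _, sense in examples})
    weights = defaultdict(float)
    data = [(features(text), sense) for text, sense in examples]
    for _ in range(epochs):
        for feats, gold in data:
            probs = predict_proba(weights, senses, feats)
            for s in senses:
                # Gradient: observed indicator minus predicted probability.
                grad = (1.0 if s == gold else 0.0) - probs[s]
                for f in feats:
                    weights[(s, f)] += lr * grad
    return weights, senses

def classify(weights, senses, sentence):
    probs = predict_proba(weights, senses, features(sentence))
    return max(senses, key=lambda s: probs[s])

# Hypothetical hand-labeled examples for the polysemous entry "bank".
EXAMPLES = [
    ("she deposited money at the bank", "finance"),
    ("the bank approved the loan", "finance"),
    ("they sat on the river bank", "river"),
    ("the bank of the stream was muddy", "river"),
]

weights, senses = train(EXAMPLES)
print(classify(weights, senses, "he deposited money"))
print(classify(weights, senses, "the muddy river"))
```

In a setting like the one described above, each dictionary entry would have its own set of sense labels, and new corpus sentences selected by GDEX would be passed through `classify` to attach them to a sense.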

Keywords: WSD; maximum entropy; collocations; legacy dictionaries; example sentences

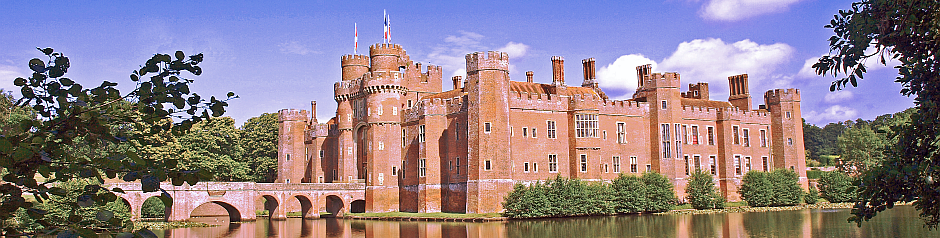

Reference: In Kosem, I., Jakubiček, M., Kallas, J., Krek, S. (eds.) Electronic lexicography in the 21st century: linking lexical data in the digital age. Proceedings of the eLex 2015 conference, 11-13 August 2015, Herstmonceux Castle, United Kingdom. Ljubljana/Brighton: Trojina, Institute for Applied Slovene Studies/Lexical Computing Ltd., pp. 304-314.

URL: https://elex.link/elex2015/proceedings/eLex_2015_19_Geyken+Politz+Bartz.pdf

Published: 2015